https://morethanmoore.substack.com/p/intel-foundry-realigning-the-money

Intel held an investor-focused webinar on 2 April, but it contained some interesting bits of tech-related info.

18A will ramp in 2025, with significant revenue counted from 2026. This is similar to TSMC, which introduces a node and then takes roughly a year before it reaches High Volume Manufacturing (HVM).

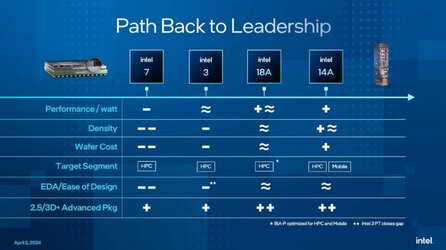

This slide shows where Intel sees its process versus the competition at the time each node is offered, not on a like-for-like node basis. If you're wondering where Intel 4 and 20A are, they're not listed because they're primarily for internal use. The listed nodes are the ones offered for anyone to use.

Intel 7 is rated as behind because AMD and Nvidia are already using N5-class nodes for consumer products. Intel 3 closes the gap, but by the time Intel ships it in volume, TSMC will have been shipping N3 for some time. 18A is where Intel thinks it will match or take the lead, and 14A is where it expects to clearly pass TSMC.

The CPU core dies of Intel's next-gen desktop parts will be made on 20A, which can be seen as an early version of 18A, so they should at least be generally competitive against Zen 5, which is expected to be on a TSMC 3nm-class node.

Interestingly, Intel sees costs going down as it moves to the newer EUV nodes. Don't expect that to mean cheaper products, though; the savings are more likely to go towards restoring profitability, which isn't strong at the moment.

Intel thinks its use of external wafers (TSMC?) has peaked and expects to bring more production back in-house going forward. Currently around 30% of its wafers are made externally.

Of course, all this depends on Intel executing its plan. It is getting first access to ASML's newest toys, which could give it a lead over TSMC. TSMC's head has previously played down Intel's claimed future performance.
