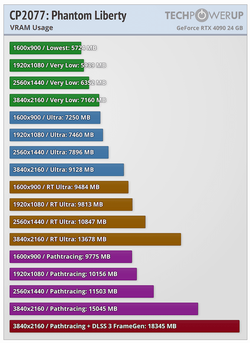

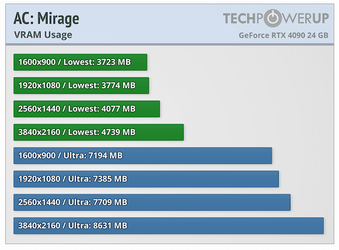

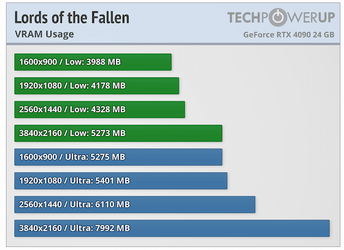

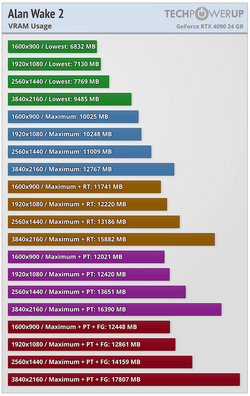

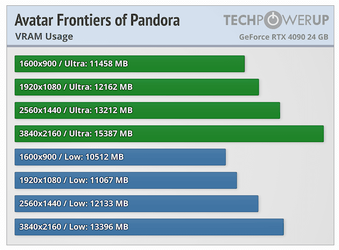

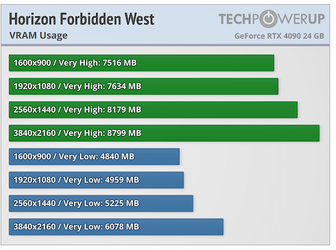

I mean..... it depends. It's not black and white. There are plenty of titles where 8GB is eclipsed at 4K. That said, not all titles that breach your vRAM capacity respond the same way. Some titles allocate what they can but may not use it all, so a card with less capacity may still perform well. Others need everything they allocate and take a hit when passing that mark. So while 'everything else' is a big problem, vRAM is, without a doubt, a valid consideration. 8GB vs. 12GB could be the difference between playable and not in specific titles. The higher the res and the higher the settings you want, the more potential to go past that amount. For giggles, I took screenshots from TPU's recent game reviews that show vRAM use. I went back to 2023, skipping only CS2 b/c, well, that looks like my kid drew it and it's made not for beauty, but for tick rate.

At 4K, there's no way I'd go with less than 12GB today for best results over the lifespan of the card, assuming you're shooting for 'ultra' gaming. YMMV, of course, depending on what titles you play, the settings, and the basic horsepower of the card in the first place, but me, I'd go higher than 8GB for the games I play..... if I played at 4K (or even 1440p).