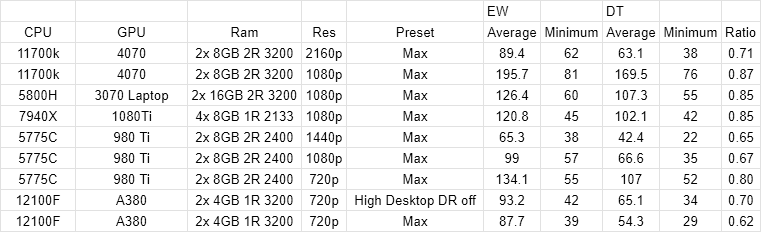

It has just been announced that the FFXIV Dawntrail benchmark will drop soon, on 14 April at 00:00 PDT (07:00 UTC). Dawntrail is the upcoming expansion, and it comes with a graphical update to the game. I intend to test it against the current Endwalker benchmark.

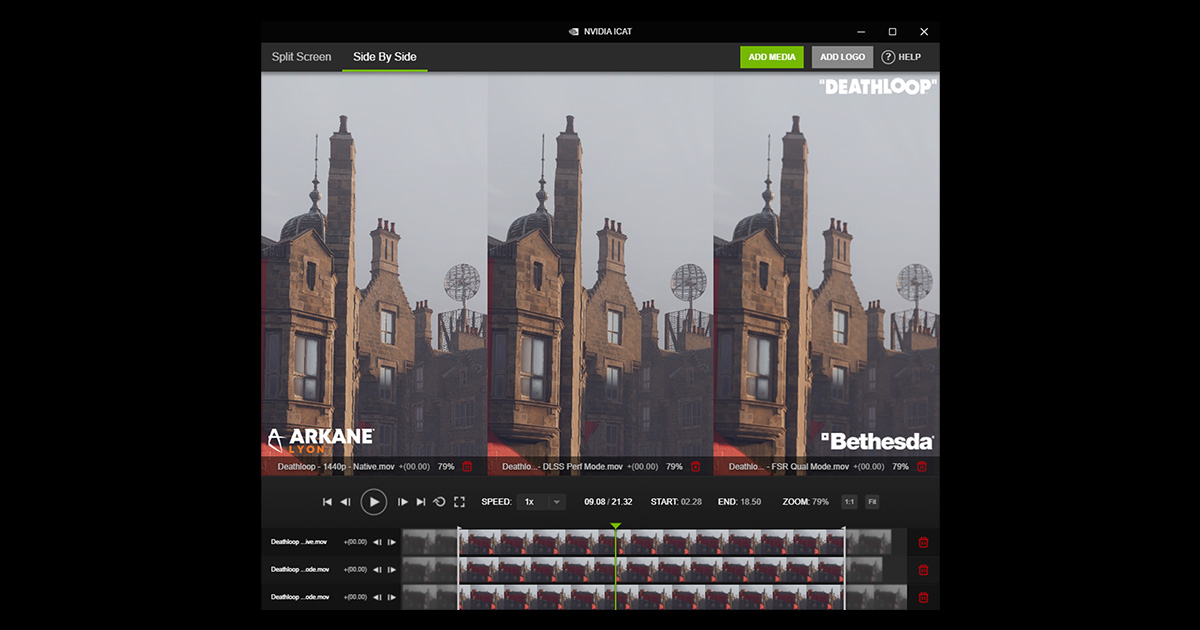

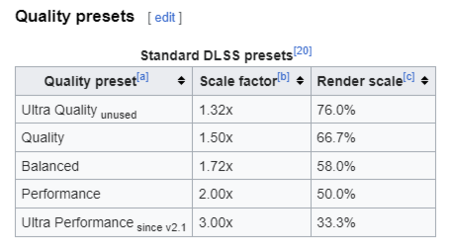

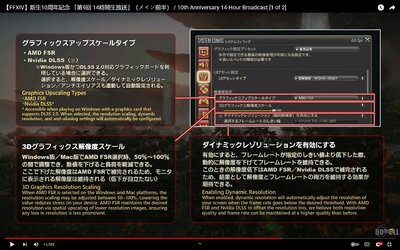

There are updates to the game's graphics affecting lighting/shadows, textures and likely more. On the technical side it also introduces upscaling support in the form of FSR 1.0 and DLSS 2.0. FSR will be enabled by default, and the user has access to a render scale slider from 50% to 100%. I presume 100% means native rendering. Does FSR1 do anything else when not scaling? There seems to be a separate AA setting shown elsewhere.
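To get a feel for what the render scale slider means in pixels, here is a quick sketch. It assumes the percentage applies to each axis (width and height), which is how render scale sliders commonly work, but that hasn't been confirmed for this game. `render_resolution` is just a helper name I made up.

```python
def render_resolution(width, height, scale_percent):
    """Internal render resolution for a given output size.

    Assumption: the slider scales each axis, so 50% gives a quarter
    of the pixels. Not confirmed for the Dawntrail benchmark.
    """
    if not 50 <= scale_percent <= 100:
        raise ValueError("the slider only covers 50% to 100%")
    factor = scale_percent / 100
    return round(width * factor), round(height * factor)


if __name__ == "__main__":
    out_w, out_h = 2560, 1440  # example output resolution
    for scale in (100, 75, 50):
        w, h = render_resolution(out_w, out_h, scale)
        share = (w * h) / (out_w * out_h)
        print(f"{scale:3d}% -> {w}x{h} ({share:.0%} of native pixels)")
```

If the per-axis assumption holds, the bottom of the slider at 1440p output would be rendering at 720p internally.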

There is a dynamic resolution setting. The option shown in the screenshot says it can be enabled when the frame rate drops below 60fps. It wasn't shown what other options there may be besides off. The benchmark has the same settings as the game, so we should be able to find out.

DLSS support is implemented in an interesting way. It's on or off only. No user control beyond that. Also it is tied to the dynamic resolution setting. I think it would be nice to have user control over the settings, but let's see how it works in practice.

One concern I have is that in the existing game, there are many situations where the fps tanks because of CPU limits, not GPU. Typically this will be in areas with a high player count, like cities or hunt trains. Is the dynamic resolution smart enough to recognise that this can't be helped by dropping graphical settings? This can't be tested in the benchmark directly, but it might be possible by lowering available CPU resources to simulate that effect.
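To illustrate the concern, here is a toy model, not how the game actually works. It assumes the frame time is whichever of CPU or GPU takes longer, that GPU time scales with pixel count (render scale squared), and that CPU time doesn't change with resolution. All function names and numbers are made up for illustration.

```python
def frame_time_ms(cpu_ms, gpu_ms_native, scale_percent):
    """Toy model: the slower of CPU and GPU sets the frame time.

    Assumption: GPU cost is proportional to pixel count, CPU cost
    is independent of render resolution.
    """
    gpu_ms = gpu_ms_native * (scale_percent / 100) ** 2
    return max(cpu_ms, gpu_ms)


def fps(cpu_ms, gpu_ms_native, scale_percent):
    return 1000 / frame_time_ms(cpu_ms, gpu_ms_native, scale_percent)


def highest_scale_reaching(cpu_ms, gpu_ms_native, target_fps=60, min_scale=50):
    """Step the render scale down in 5% steps until the target is met.

    Returns the scale that reaches target_fps, or None if no scale
    in the slider range gets there.
    """
    for scale in range(100, min_scale - 1, -5):
        if fps(cpu_ms, gpu_ms_native, scale) >= target_fps:
            return scale
    return None


if __name__ == "__main__":
    # GPU limited: 8 ms CPU, 25 ms GPU at native resolution
    print("GPU limited:", highest_scale_reaching(cpu_ms=8, gpu_ms_native=25))
    # CPU limited (busy city): 25 ms CPU, 10 ms GPU at native resolution
    print("CPU limited:", highest_scale_reaching(cpu_ms=25, gpu_ms_native=10))
```

In the GPU limited case, dropping the render scale gets back to 60fps. In the CPU limited case nothing in the slider range helps, so a naive dynamic resolution would just cost image quality for no gain.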

I was hoping this would be a simple run benchmark, get number, but with these settings there's going to be a lot more to explore. I think runs with FSR 1 at 100% scale and dynamic resolution disabled will be a starting point, but the performance-quality tradeoffs from upscaling will certainly be of interest.