- Joined

- Jul 20, 2002

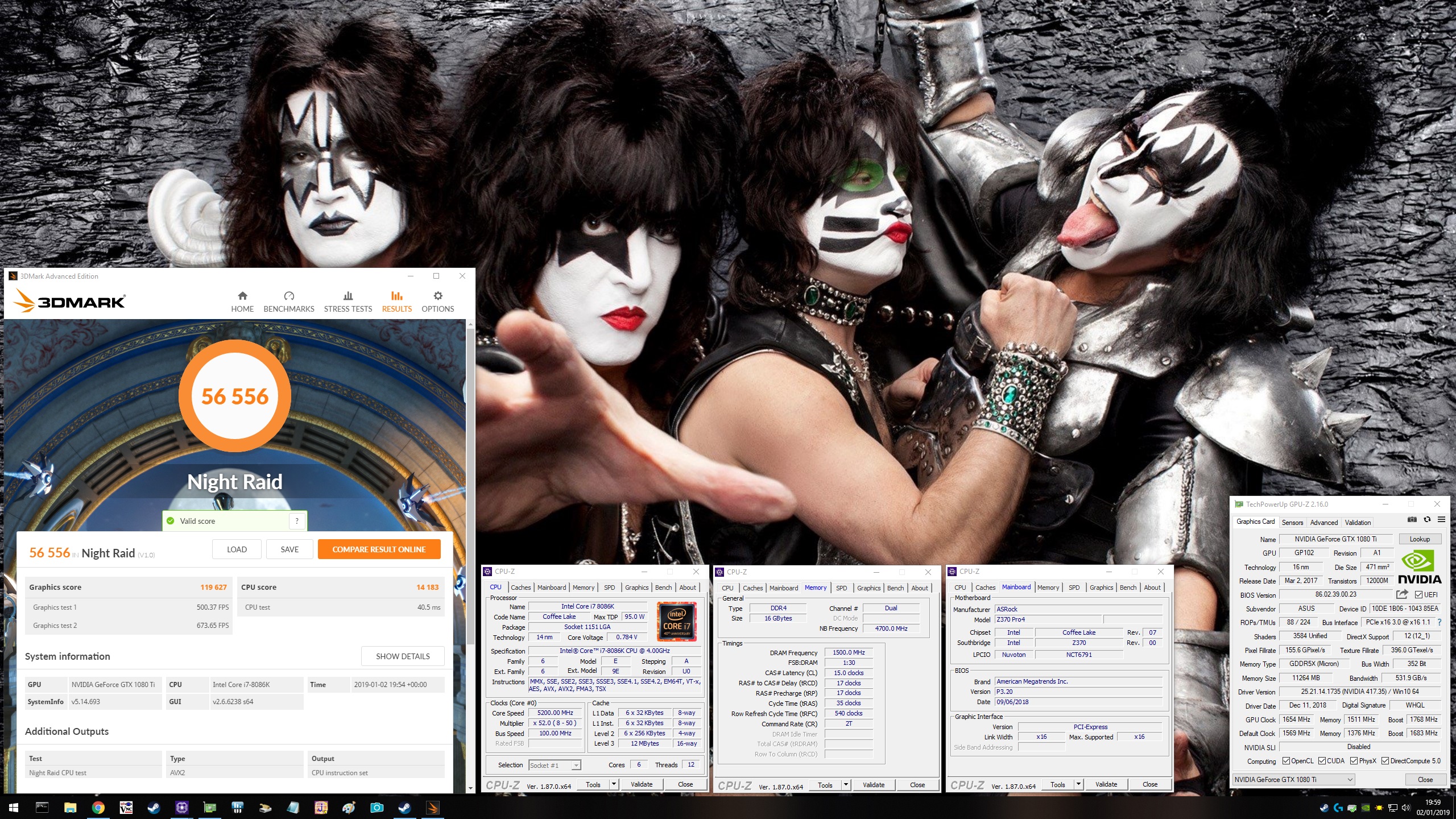

I used to wait until I could get a videocard that was at least 100% faster, just so I'd see some real gains in perf, but that was back when videocards were still relatively cheap in terms of price/performance. I waited nearly 5 years to upgrade my 1080 Ti, and only did so because its performance deficits were really noticeable in Metro Exodus and it had no RT acceleration. I'm not considering upgrading anything ATM because of financial constraints, but what are some good videocard upgrade strats people are using now, after the cryptocurrency-driven price inflation? I'm looking for ideas on whether to immediately sell what you have and move up when the next-gen videocards are released, to hold on to what you've got until you have no choice but to upgrade, or maybe to wait until the halo-model next-gen cards come out.